If you use Xero and Google Sheets on a daily basis, this automation tutorial is for you. In this post, you’ll learn how to automatically sync your Xero bills into a Google Sheet on a schedule, so your spending table stays up to date without copy-pasting.

We’ll build it in a production-friendly way: OAuth2 authorization (so Xero can securely grant access), safe credential storage in Apps Script, a sync function that writes into your sheet, and refresh tokens (via offline_access) so the automation keeps running long-term.

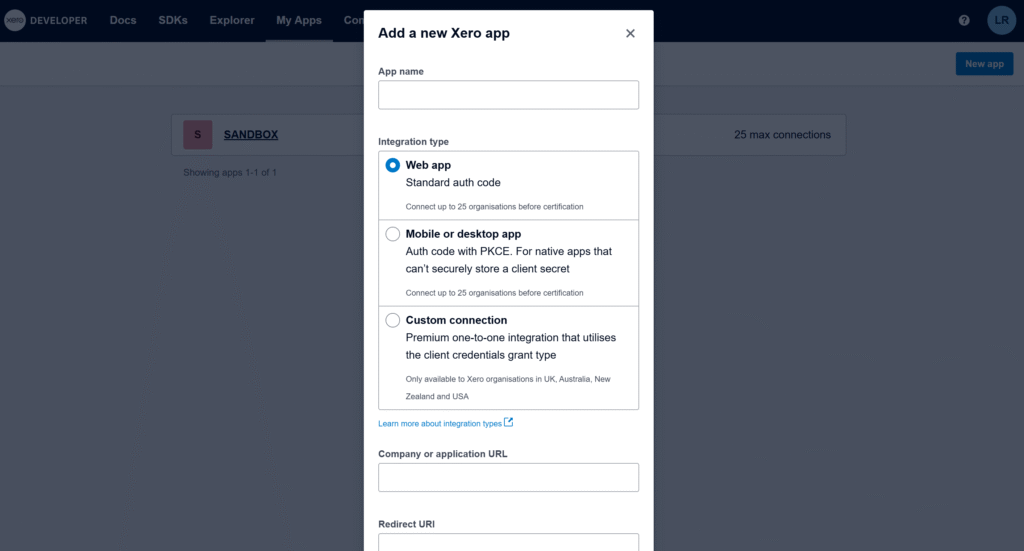

Create a Xero OAuth2 app

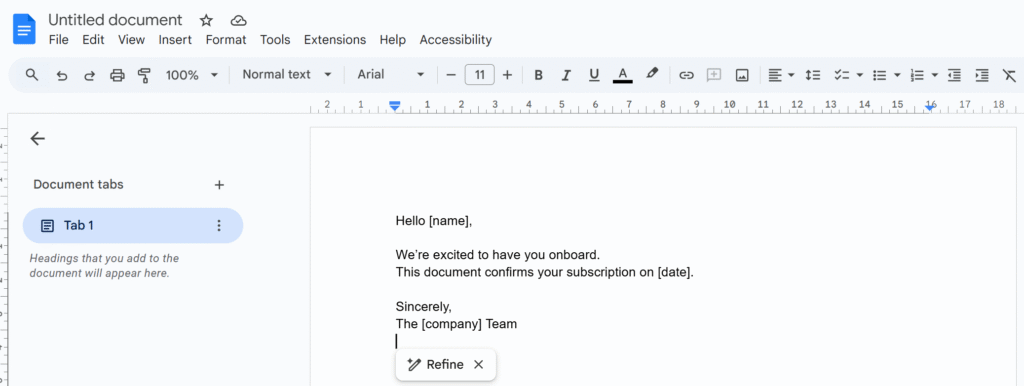

Create a Xero OAuth2 app in the Xero Developer portal and choose the web app / Standard auth code type (the standard flow Xero documents for third-party apps). The standard authorization code flow

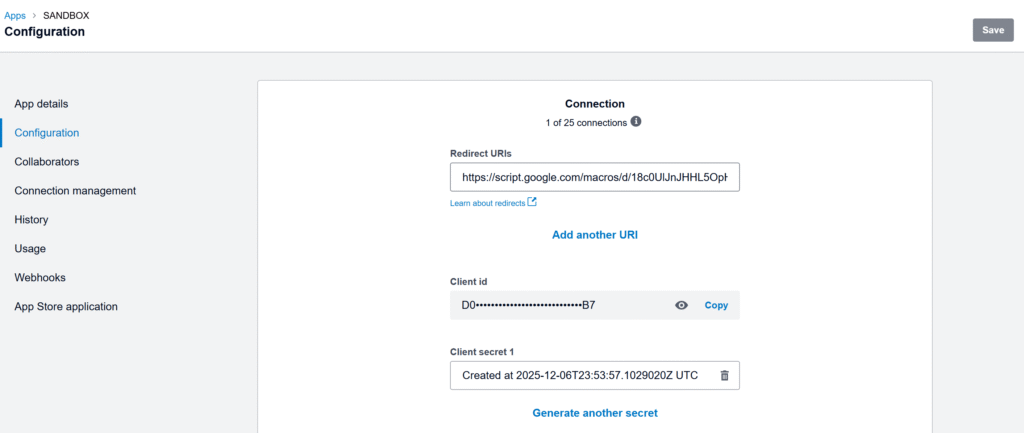

The most important field is Redirect URI. Xero requires an exact, absolute redirect URI (no wildcards), so we will need the exact callback URL generated by your Apps Script project. Xero API – OAuth 2.0

Get a temporary redirect URI to create the app

In Apps Script, the OAuth2 library can generate the exact redirect URI for this project. Run the function below, copy the URL from the logs, and paste it into your Xero app settings to finish creating the app.

/**

* Logs the Redirect URI you must paste into your Xero app settings.

* This does NOT require Client ID/Secret.

*

* @returns {void}

*/

function logXeroRedirectUri() {

const service = OAuth2.createService('Xero')

.setCallbackFunction('xeroAuthCallback');

Logger.log(service.getRedirectUri());

}

/**

* OAuth callback endpoint (stub is fine for this step).

* @param {Object} e

* @returns {GoogleAppsScript.HTML.HtmlOutput}

*/

function xeroAuthCallback(e) {

return HtmlService.createHtmlOutput('Callback ready.');

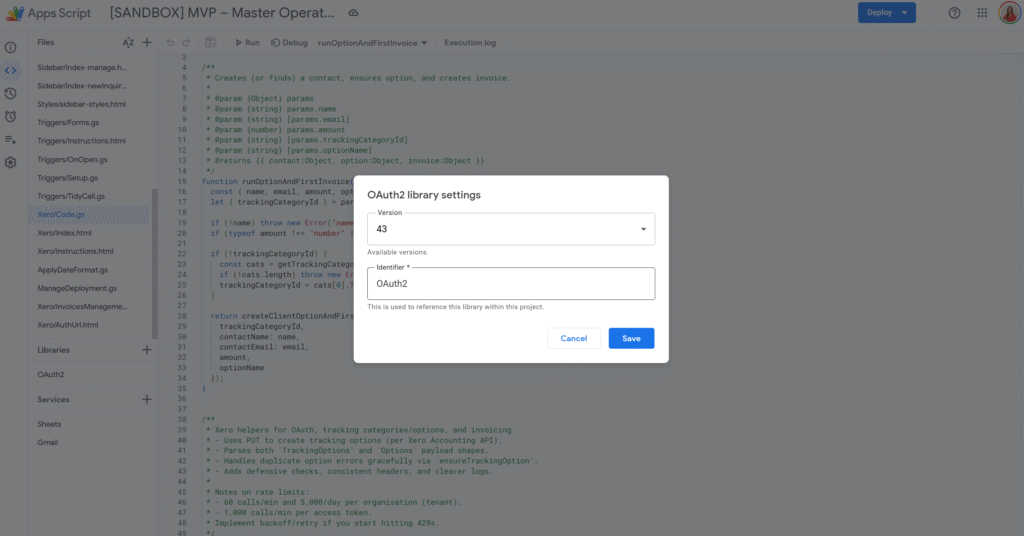

}The Apps Script OAuth2 library is the standard way to handle OAuth consent and token refresh in Apps Script. (Link to the setup guide here).

Get and store Client ID / Client Secret in Script Properties

Once the app is created, copy the Client ID and Client Secret. You’ll need them in Apps Script to complete the OAuth flow.

Store only the Client ID and Client Secret in Script Properties. OAuth tokens are user-specific, so we store them in User Properties via the OAuth2 library’s property store.

const XERO_PROPS_ = {

clientId: 'XERO_CLIENT_ID',

clientSecret: 'XERO_CLIENT_SECRET',

lastSyncIso: 'XERO_BILLS_LAST_SYNC_ISO',

};

/**

* Saves Xero app credentials to Script Properties.

* @param {string} clientId

* @param {string} clientSecret

* @returns {void}

*/

function saveXeroCredentials_(clientId, clientSecret) {

PropertiesService.getScriptProperties()

.setProperty(XERO_PROPS_.clientId, clientId)

.setProperty(XERO_PROPS_.clientSecret, clientSecret);

}

/**

* Reads Xero app credentials from Script Properties.

* @returns {{clientId: string, clientSecret: string}}

*/

function getXeroCredentials_() {

const props = PropertiesService.getScriptProperties();

const clientId = props.getProperty(XERO_PROPS_.clientId) || '';

const clientSecret = props.getProperty(XERO_PROPS_.clientSecret) || '';

if (!clientId || !clientSecret) throw new Error('Missing Xero credentials in Script Properties.');

return { clientId, clientSecret };

}Replace the temporary callback with the real OAuth service + callback

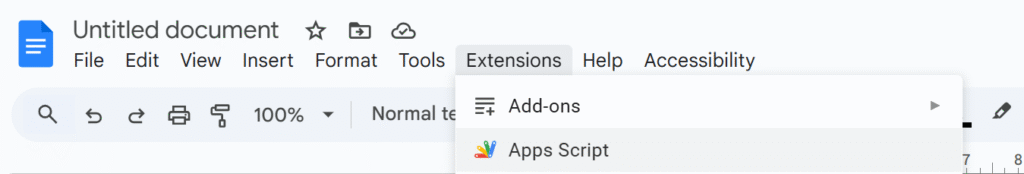

Now that we have Client ID/Secret stored, we can build the real OAuth service and the real callback that stores tokens.

Important: tokens are user-specific, so we store them in User Properties via

.setPropertyStore(PropertiesService.getUserProperties()).

Let’s replace the previous xeroAuthCallback() with this version:

/**

* Creates and returns the OAuth2 service configured for Xero.

* Client ID/Secret are in Script Properties; OAuth tokens are stored per-user in User Properties.

*

* @returns {OAuth2.Service}

*/

function getXeroService_() {

const { clientId, clientSecret } = getXeroCredentials_();

return OAuth2.createService('Xero')

.setAuthorizationBaseUrl('https://login.xero.com/identity/connect/authorize')

.setTokenUrl('https://identity.xero.com/connect/token')

.setClientId(clientId)

.setClientSecret(clientSecret)

.setCallbackFunction('xeroAuthCallback')

.setPropertyStore(PropertiesService.getUserProperties())

.setCache(CacheService.getUserCache())

.setLock(LockService.getUserLock())

.setScope([

'openid',

'profile',

'email',

'offline_access',

'accounting.transactions',

].join(' '))

.setTokenHeaders({

Authorization: 'Basic ' + Utilities.base64Encode(`${clientId}:${clientSecret}`),

});

}

/**

* OAuth callback endpoint called by Xero after consent.

* This stores the access token + refresh token in the OAuth2 property store.

*

* @param {Object} e

* @returns {GoogleAppsScript.HTML.HtmlOutput}

*/

function xeroAuthCallback(e) {

const service = getXeroService_();

const ok = service.handleCallback(e);

return HtmlService.createHtmlOutput(ok ? 'Success! You can close this tab.' : 'Denied');

}Authorize once

This is a one-time consent step: generate the authorization URL, open it in your browser, click Allow, then Xero redirects back to xeroAuthCallback() to finalize the connection.

You must request

offline_accessto receive a refresh token. Xero Developer – Offline access

Xero’s docs also note refresh tokens expire after a period (so if the integration stops running for too long, you may need to re-authorize).

/**

* Returns the Xero consent screen URL. Open it and approve access.

*

* @returns {string}

*/

function getXeroAuthorizationUrl_() {

const service = getXeroService_();

return service.getAuthorizationUrl();

}

/**

* Logs the authorization URL in Apps Script logs.

*

* @returns {void}

*/

function logXeroAuthorizationUrl_() {

Logger.log(getXeroAuthorizationUrl_());

}Run logXeroAuthorizationUrl_(), copy the logged URL, open it, and approve access. After that, your script can call Xero without prompting again.

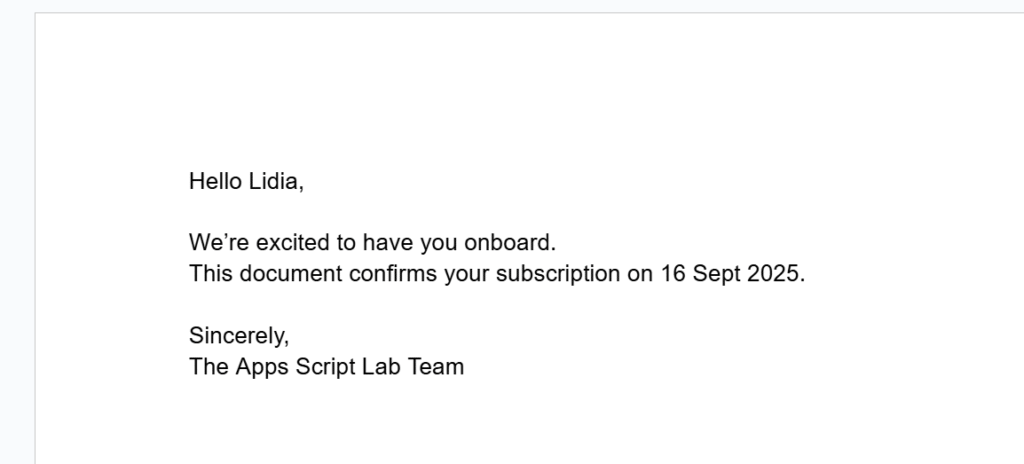

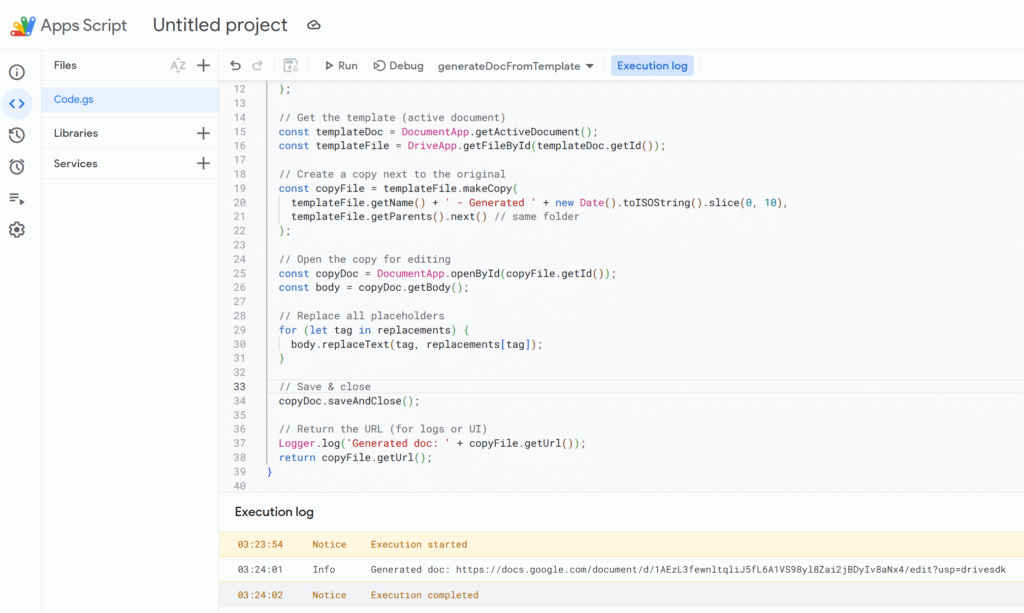

Sync bills into Sheets (then add a trigger)

Xero is multi-tenant: first call the Connections endpoint to get a tenantId, then include xero-tenant-id in every API request. For incremental sync, use the If-Modified-Since header so each run only pulls bills modified since your last sync timestamp.

const XERO_API_BASE_ = 'https://api.xero.com/api.xro/2.0';

const XERO_BILLS_SHEET_ = 'Xero Bills';

/**

* Resolves the active tenant ID from Xero connections.

*

* @param {OAuth2.Service} service

* @returns {string}

*/

function getXeroTenantId_(service) {

const res = UrlFetchApp.fetch('https://api.xero.com/connections', {

method: 'get',

headers: { Authorization: `Bearer ${service.getAccessToken()}` },

muteHttpExceptions: true,

});

if (res.getResponseCode() !== 200) {

throw new Error(`Failed to resolve tenant. ${res.getResponseCode()} — ${res.getContentText()}`);

}

const tenants = JSON.parse(res.getContentText());

const tenantId = tenants?.[0]?.tenantId;

if (!tenantId) throw new Error('No tenant connected to this token.');

return tenantId;

}

/**

* Performs a JSON request to Xero with common headers.

*

* @param {"get"|"post"|"put"|"delete"} method

* @param {string} url

* @param {Object|null} body

* @param {{ ifModifiedSinceIso?: string }} [opts]

* @returns {{code: number, text: string, json?: Object}}

*/

function xeroJsonRequest_(method, url, body, opts = {}) {

const service = getXeroService_();

if (!service.hasAccess()) throw new Error('Xero access not granted. Run the authorization flow first.');

const tenantId = getXeroTenantId_(service);

/** @type {GoogleAppsScript.URL_Fetch.URLFetchRequestOptions} */

const options = {

method,

headers: {

Authorization: `Bearer ${service.getAccessToken()}`,

'xero-tenant-id': tenantId,

Accept: 'application/json',

...(opts.ifModifiedSinceIso ? { 'If-Modified-Since': opts.ifModifiedSinceIso } : {}),

},

muteHttpExceptions: true,

};

if (body != null) {

options.contentType = 'application/json';

options.payload = JSON.stringify(body);

}

const res = UrlFetchApp.fetch(url, options);

const code = res.getResponseCode();

const text = res.getContentText();

let json;

try {

json = text ? JSON.parse(text) : undefined;

} catch (e) {}

return { code, text, json };

}

/**

* Returns ACCPAY invoices (bills) from Xero.

*

* @param {{ ifModifiedSinceIso?: string }} [params]

* @returns {Array<Object>}

*/

function listBills_(params = {}) {

const where = encodeURIComponent('Type=="ACCPAY"');

const url = `${XERO_API_BASE_}/Invoices?where=${where}`;

const { code, text, json } = xeroJsonRequest_('get', url, null, {

ifModifiedSinceIso: params.ifModifiedSinceIso,

});

if (code !== 200) throw new Error(`Failed to list bills. ${code} — ${text}`);

return /** @type {Array<Object>} */ (json?.Invoices || []);

}

/**

* Upserts bills into a sheet using InvoiceID as the key.

*

* @param {SpreadsheetApp.Sheet} sheet

* @param {Array<Object>} bills

* @returns {void}

*/

function upsertBillsToSheet_(sheet, bills) {

const headers = [

'InvoiceID',

'InvoiceNumber',

'ContactName',

'Status',

'Date',

'DueDate',

'CurrencyCode',

'Total',

'AmountDue',

'UpdatedDateUTC',

];

const current = sheet.getDataRange().getValues();

if (!current.length || current[0].join() !== headers.join()) {

sheet.clear();

sheet.getRange(1, 1, 1, headers.length).setValues([headers]);

}

const data = sheet.getDataRange().getValues();

/** @type {Map<string, number>} */

const rowById = new Map();

for (let r = 2; r <= data.length; r++) {

const id = String(data[r - 1][0] || '');

if (id) rowById.set(id, r);

}

const rowsToAppend = [];

bills.forEach(b => {

const row = [

b.InvoiceID || '',

b.InvoiceNumber || '',

b.Contact?.Name || '',

b.Status || '',

b.Date || '',

b.DueDate || '',

b.CurrencyCode || '',

b.Total ?? '',

b.AmountDue ?? '',

b.UpdatedDateUTC || '',

];

const invoiceId = String(b.InvoiceID || '');

const existingRow = rowById.get(invoiceId);

if (existingRow) {

sheet.getRange(existingRow, 1, 1, headers.length).setValues([row]);

} else {

rowsToAppend.push(row);

}

});

if (rowsToAppend.length) {

sheet.getRange(sheet.getLastRow() + 1, 1, rowsToAppend.length, headers.length).setValues(rowsToAppend);

}

}

/**

* Main sync: pulls bills changed since last sync and writes them into Google Sheets.

*

* @returns {void}

*/

function syncXeroBillsToSheet() {

const ss = SpreadsheetApp.getActiveSpreadsheet();

const sheet = ss.getSheetByName(XERO_BILLS_SHEET_) || ss.insertSheet(XERO_BILLS_SHEET_);

const props = PropertiesService.getScriptProperties();

const lastSyncIso = props.getProperty(XERO_PROPS_.lastSyncIso) || '';

const bills = listBills_({ ifModifiedSinceIso: lastSyncIso || undefined });

upsertBillsToSheet_(sheet, bills);

props.setProperty(XERO_PROPS_.lastSyncIso, new Date().toISOString());

}

/**

* Creates an hourly time-driven trigger for syncXeroBillsToSheet().

*

* @returns {void}

*/

function installXeroBillsTrigger_() {

ScriptApp.newTrigger('syncXeroBillsToSheet')

.timeBased()

.everyHours(1)

.create();

}A clean first run is: save credentials → run logXeroAuthorizationUrl_() and approve → run syncXeroBillsToSheet() once → run installXeroBillsTrigger_() to automate the sync.

You can now install a simple time-driven trigger on syncXeroBillsToSheet() to keep your spreadsheet up to date automatically. From here, it’s easy to adapt the same structure to pull other Xero data: switch from bills (ACCPAY) to sales invoices (ACCREC), filter only paid documents, or add extra columns depending on what you want to analyse.